SWE-bench is a benchmark for evaluating large language models on real-world software issues collected from GitHub. Given a codebase and an issue, a language model is tasked with generating a patch that resolves the described problem. SWE-bench Verified is a human-validated subset that more reliably evaluates AI models’ ability to solve issues. The International Olympiad in Informatics (IOI) is a competition with standardized, automated grading.

| Model | SWE-bench | 🏆 IOI | Organization | License | Date | Agent |
|---|---|---|---|---|---|---|
| | 79.2 | 26.3 | Anthropic | Proprietary | 2026-02-05 | mini-SWE-agent |
| | 75.2 | 39.1 | | Proprietary | 2025-12-17 | mini-SWE-agent |
| | 74.4 | 23.6 | Anthropic | Proprietary | 2025-11-24 | mini-SWE-agent |
| | 74.2 | 38.8 | | Proprietary | 2025-11-18 | mini-SWE-agent |
| GPT-5.2 (high) | 71.8 | 54.8 | OpenAI | Proprietary | 2025-12-11 | mini-SWE-agent |
| Grok 4 | 70.6 | 26.2 | xAI | Proprietary | 2025-07-09 | OpenHands |
| | 70.6 | 18.5 | Anthropic | Proprietary | 2025-09-29 | mini-SWE-agent |
| GPT-5.1 (high) | 70.5 | 21.5 | OpenAI | Proprietary | 2025-11-13 | OpenHands |
| GPT-5 (high) | 70.1 | 20 | OpenAI | Proprietary | 2025-08-07 | OpenHands |
| | 70.1 | 15.2 | Anthropic | Proprietary | 2025-08-05 | OpenHands |
| | 69.6 | 15.7 | Alibaba | Proprietary | 2025-09-23 | OpenHands |
| | 68.8 | 6.2 | Anthropic | Proprietary | 2025-10-15 | OpenHands |
| | 68 | 6.5 | Anthropic | Proprietary | 2025-05-14 | OpenHands |
| | 67.6 | | Anthropic | Proprietary | 2025-05-14 | mini-SWE-agent |
| | 67 | | Alibaba | Apache 2.0 | 2025-07-22 | OpenHands |
| | 66 | | DeepSeek | MIT | 2025-08-21 | OpenHands |
| | 65.4 | 1.3 | Moonshot | Modified MIT | 2025-07-11 | OpenHands |
| GPT-5 (medium) | 65 | | OpenAI | Proprietary | 2025-08-07 | mini-SWE-agent |
| GLM-4.5 | 64.2 | | Z.ai | MIT | 2025-07-28 | OpenHands |
| GPT-5 mini | 59.8 | | OpenAI | Proprietary | 2025-08-07 | mini-SWE-agent |
| o3 | 58.4 | | OpenAI | Proprietary | 2025-04-16 | mini-SWE-agent |
| GLM-4.5-Air | 57.6 | | Z.ai | MIT | 2025-07-28 | OpenHands |
| | 53.6 | 17.1 | | Proprietary | 2025-05-06 | mini-SWE-agent |
| | 52.8 | | Anthropic | Proprietary | 2025-02-19 | mini-SWE-agent |
| | 51.6 | | Alibaba | Apache 2.0 | 2025-07-30 | OpenHands |
| GPT-4.1 | 48.6 | | OpenAI | Proprietary | 2025-04-14 | OpenHands |
| o4-mini | 45 | 5.3 | OpenAI | Proprietary | 2025-04-16 | mini-SWE-agent |
| | 41.4 | | DeepSeek | MIT | 2025-05-28 | OpenHands |
| | 38.8 | 1.7 | DeepSeek | MIT | 2025-03-24 | OpenHands |
| GPT-5 nano | 34.8 | | OpenAI | Proprietary | 2025-08-07 | mini-SWE-agent |
| | 28.73 | 3.9 | | Proprietary | 2025-04-17 | mini-SWE-agent |
| GPT-4.1 mini | 23.94 | | OpenAI | Proprietary | 2025-04-14 | mini-SWE-agent |
| GPT-4o | 21.62 | | OpenAI | Proprietary | 2024-11-20 | mini-SWE-agent |
| | 21.04 | | Meta | Llama 4 | 2025-04-05 | mini-SWE-agent |
| | 13.52 | | | Proprietary | 2025-02-05 | mini-SWE-agent |
| | 9.06 | | Meta | Llama 4 | 2025-04-05 | mini-SWE-agent |
| | 9 | | Alibaba | Apache 2.0 | 2024-11-12 | mini-SWE-agent |

Score sources: SWE-bench Verified (100 turns) and IOI Benchmark (2024 and 2025 exams).


👋 Overview

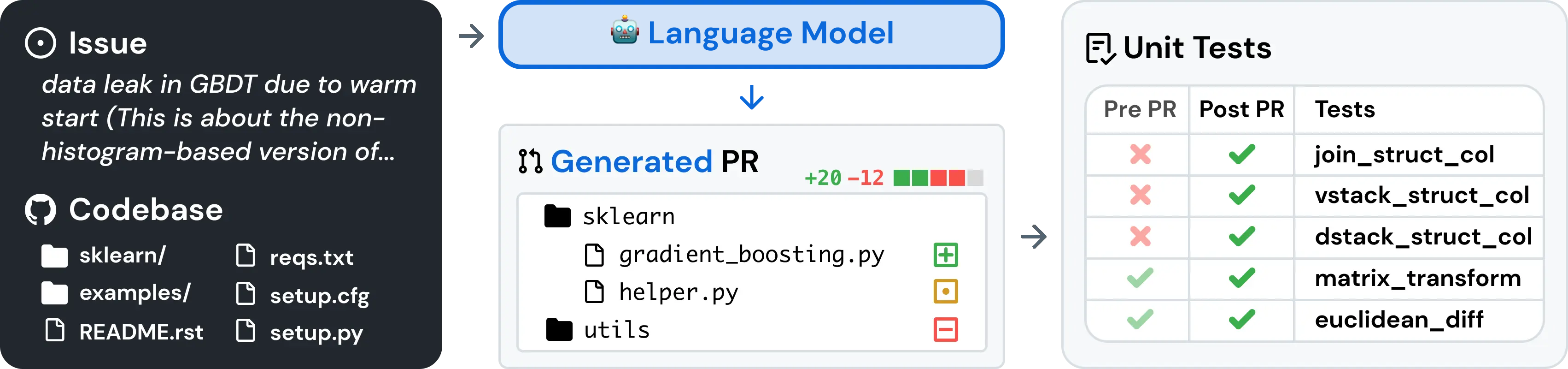

SWE-bench tests AI systems’ ability to solve GitHub issues.

We collect 2,294 task instances by crawling Pull Requests and Issues from 12 popular Python repositories. Each instance is based on a pull request that (1) is associated with an issue and (2) modifies at least one testing-related file.
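The two selection criteria can be read as a simple filter over candidate pull requests. The sketch below is illustrative only: the `PullRequest` record, its field names, and the path-based test-file heuristic are assumptions made for this example, not the actual collection code.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical record for a crawled pull request (illustrative only)."""
    number: int
    linked_issues: list[int] = field(default_factory=list)
    changed_files: list[str] = field(default_factory=list)


def touches_tests(path: str) -> bool:
    """Assumed heuristic: any path component that mentions 'test'."""
    return any("test" in part.lower() for part in path.split("/"))


def is_candidate(pr: PullRequest) -> bool:
    """Criteria from the text: (1) a linked issue, (2) at least one test file changed."""
    return bool(pr.linked_issues) and any(touches_tests(f) for f in pr.changed_files)


prs = [
    PullRequest(1, linked_issues=[10], changed_files=["pkg/core.py", "pkg/tests/test_core.py"]),
    PullRequest(2, linked_issues=[], changed_files=["pkg/tests/test_io.py"]),
    PullRequest(3, linked_issues=[11], changed_files=["pkg/io.py"]),
]
candidates = [pr.number for pr in prs if is_candidate(pr)]
print(candidates)
```

Only the first pull request satisfies both conditions; the second has no linked issue and the third changes no test file.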

Per instance, we construct an execution environment (Docker Image) with the repository successfully installed at the commit that the Pull Request is based on. Without the Pull Request’s changes, one or more tests fail. After the Pull Request is merged, the same tests pass. These “Fail-to-Pass” tests are the primary signal for evaluation.

SWE-bench evaluation works as follows. Per task instance, an AI system is given the issue text. The AI system should then modify the codebase to resolve the described issue. When the AI system is finished, we run the aforementioned Fail-to-Pass tests to check whether the issue was successfully resolved.
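As a rough illustration of the Fail-to-Pass check, the sketch below marks an instance as resolved only when every Fail-to-Pass test passes after the patch is applied. The function name, the test identifier, and the `"PASSED"`/`"FAILED"` status strings are hypothetical, and the real harness may apply further checks.

```python
def is_resolved(fail_to_pass: list[str], test_status: dict[str, str]) -> bool:
    """Return True only if every Fail-to-Pass test passed after the patch.

    `test_status` maps test names to outcome strings; the "PASSED" label
    is an assumption made for this sketch.
    """
    if not fail_to_pass:
        return False  # nothing to check, so do not count it as resolved
    return all(test_status.get(name) == "PASSED" for name in fail_to_pass)


# Hypothetical test name, used only to exercise the function.
fail_to_pass = ["tests/test_basic.py::test_slots"]
before_patch = {"tests/test_basic.py::test_slots": "FAILED"}
after_patch = {"tests/test_basic.py::test_slots": "PASSED"}

print(is_resolved(fail_to_pass, before_patch))  # False
print(is_resolved(fail_to_pass, after_patch))   # True
```

A test missing from the results is treated as not passing, which keeps the check conservative.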

Code and data for the following works:

- [ICLR 2025] SWE-bench Multimodal: Do AI Systems Generalize to Visual Software Domains?

- [ICLR 2024 Oral] SWE-bench: Can Language Models Resolve Real-World GitHub Issues?

📰 News

- [Jan. 13, 2025]: We’ve integrated SWE-bench Multimodal (paper, dataset) into this repository! Unlike SWE-bench, we’ve kept evaluation for the test split private. Submit to the leaderboard using sb-cli, our new cloud-based evaluation tool.

- [Jan. 11, 2025]: Thanks to Modal, you can now run evaluations entirely on the cloud! See here for more details.

- [Aug. 13, 2024]: Introducing SWE-bench Verified! Part 2 of our collaboration with OpenAI Preparedness. A subset of 500 problems that real software engineers have confirmed are solvable. Check out more in the report!

- [Jun. 27, 2024]: We have an exciting update for SWE-bench - with support from OpenAI’s Preparedness team: We’re moving to a fully containerized evaluation harness using Docker for more reproducible evaluations! Read more in our report.

- [Apr. 2, 2024]: We have released SWE-agent, which sets the state-of-the-art on the full SWE-bench test set! (Tweet 🔗)

- [Jan. 16, 2024]: SWE-bench has been accepted to ICLR 2024 as an oral presentation! (OpenReview 🔗)

🚀 Set Up

SWE-bench uses Docker for reproducible evaluations. Follow the instructions in the Docker setup guide to install Docker on your machine. If you’re setting up on Linux, we recommend seeing the post-installation steps as well.

Finally, to build SWE-bench from source, follow these steps:

    git clone git@github.com:princeton-nlp/SWE-bench.git
    cd SWE-bench
    pip install -e .

Test your installation by running:

    python -m swebench.harness.run_evaluation \
        --predictions_path gold \
        --max_workers 1 \
        --instance_ids sympy__sympy-20590 \
        --run_id validate-gold

ℹ️ Note

If using a macOS M-series or other ARM-based system, add `--namespace ''` to the above script. By default, the evaluation script pulls images (built for Linux) from DockerHub. Adding `--namespace ''` will cause evaluation images to be built locally instead.
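One way to apply this note automatically is to build the validation command in Python and append the empty namespace on ARM hosts. This is a minimal sketch: `build_validation_command` is a hypothetical helper, and treating `arm64` and `aarch64` as the ARM identifiers reported by `platform.machine()` is an assumption.

```python
import platform
from typing import Optional


def build_validation_command(machine: Optional[str] = None) -> list[str]:
    """Build the gold-patch validation command as an argument list."""
    machine = (machine or platform.machine()).lower()
    cmd = [
        "python", "-m", "swebench.harness.run_evaluation",
        "--predictions_path", "gold",
        "--max_workers", "1",
        "--instance_ids", "sympy__sympy-20590",
        "--run_id", "validate-gold",
    ]
    # Assumed ARM identifiers as reported by platform.machine().
    if machine in {"arm64", "aarch64"}:
        cmd += ["--namespace", ""]  # empty namespace: build images locally
    return cmd


print(build_validation_command("x86_64"))
print(build_validation_command("arm64"))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root.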

💽 Usage

Evaluate patch predictions on SWE-bench Lite with the following command:

    python -m swebench.harness.run_evaluation \
        --dataset_name princeton-nlp/SWE-bench_Lite \
        --predictions_path <path_to_predictions> \
        --max_workers <num_workers> \
        --run_id <run_id>
        # use --predictions_path 'gold' to verify the gold patches
        # use --run_id to name the evaluation run
        # use --modal true to run on Modal

This command will generate docker build logs (logs/build_images) and evaluation logs (logs/run_evaluation) in the current directory.

The final evaluation results will be stored in the evaluation_results directory.
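The file passed as `<path_to_predictions>` is not described in this section. The sketch below assumes a JSON Lines layout with `instance_id`, `model_name_or_path`, and `model_patch` keys; treat the layout, the model label, and the placeholder patch text as assumptions and check them against the repository documentation before use.

```python
import json
import tempfile
from pathlib import Path

# One prediction per task instance (assumed key names, see the note above).
predictions = [
    {
        "instance_id": "sympy__sympy-20590",
        "model_name_or_path": "my-model",  # hypothetical model label
        "model_patch": "diff --git a/example.py b/example.py\n...",  # placeholder, not a real patch
    }
]

# Write one JSON object per line.
out_path = Path(tempfile.mkdtemp()) / "predictions.jsonl"
with out_path.open("w", encoding="utf-8") as fh:
    for pred in predictions:
        fh.write(json.dumps(pred) + "\n")

# Read the file back to confirm that it round-trips.
loaded = [json.loads(line) for line in out_path.read_text(encoding="utf-8").splitlines()]
print(loaded[0]["instance_id"])
```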

⚠️ Warning

SWE-bench evaluation can be resource intensive. We recommend running on an `x86_64` machine with at least 120GB of free storage, 16GB of RAM, and 8 CPU cores. We recommend using fewer than `min(0.75 * os.cpu_count(), 24)` for `--max_workers`.

If running with Docker Desktop, make sure to increase your virtual disk space to roughly 120GB free. Set `max_workers` to be consistent with the above for the CPUs available to Docker.

Support for `arm64` machines is experimental.
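The worker recommendation can be computed directly. The helper below is a sketch with a hypothetical name; it assumes "fewer than" means strictly below the bound and never returns less than one worker.

```python
import math
import os
from typing import Optional


def recommended_max_workers(cpu_count: Optional[int] = None, cap: int = 24) -> int:
    """Largest worker count strictly below min(0.75 * cpu_count, cap)."""
    cpus = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    bound = min(0.75 * cpus, cap)
    # ceil(bound) - 1 is the largest integer strictly less than bound.
    return max(1, math.ceil(bound) - 1)


print(recommended_max_workers(8))   # bound is 6.0, so 5 workers
print(recommended_max_workers(64))  # bound is capped at 24, so 23 workers
```

When running under Docker Desktop, pass the number of CPUs allocated to Docker as `cpu_count` rather than relying on the host value.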

To see the full list of arguments for the evaluation harness, run:

python -m swebench.harness.run_evaluation --help

✍️ Citation

If you find our work helpful, please use the following citations.

    @inproceedings{
        jimenez2024swebench,
        title={{SWE}-bench: Can Language Models Resolve Real-world Github Issues?},
        author={Carlos E Jimenez and John Yang and Alexander Wettig and Shunyu Yao and Kexin Pei and Ofir Press and Karthik R Narasimhan},
        booktitle={The Twelfth International Conference on Learning Representations},
        year={2024},
        url={https://openreview.net/forum?id=VTF8yNQM66}
    }

    @inproceedings{
        yang2024swebenchmultimodal,
        title={{SWE}-bench Multimodal: Do AI Systems Generalize to Visual Software Domains?},
        author={John Yang and Carlos E. Jimenez and Alex L. Zhang and Kilian Lieret and Joyce Yang and Xindi Wu and Ori Press and Niklas Muennighoff and Gabriel Synnaeve and Karthik R. Narasimhan and Diyi Yang and Sida I. Wang and Ofir Press},
        booktitle={The Thirteenth International Conference on Learning Representations},
        year={2025},
        url={https://openreview.net/forum?id=riTiq3i21b}
    }