LLM Course

The LLM course is divided into three parts: 🧩 LLM Fundamentals is optional and covers fundamental knowledge about mathematics, Python, and neural networks. 🧑‍🔬 The LLM Scientist focuses on building the best possible LLMs using the latest techniques. 👷 The LLM Engineer focuses on creating LLM-based applications and deploying them.

Awesome LLM

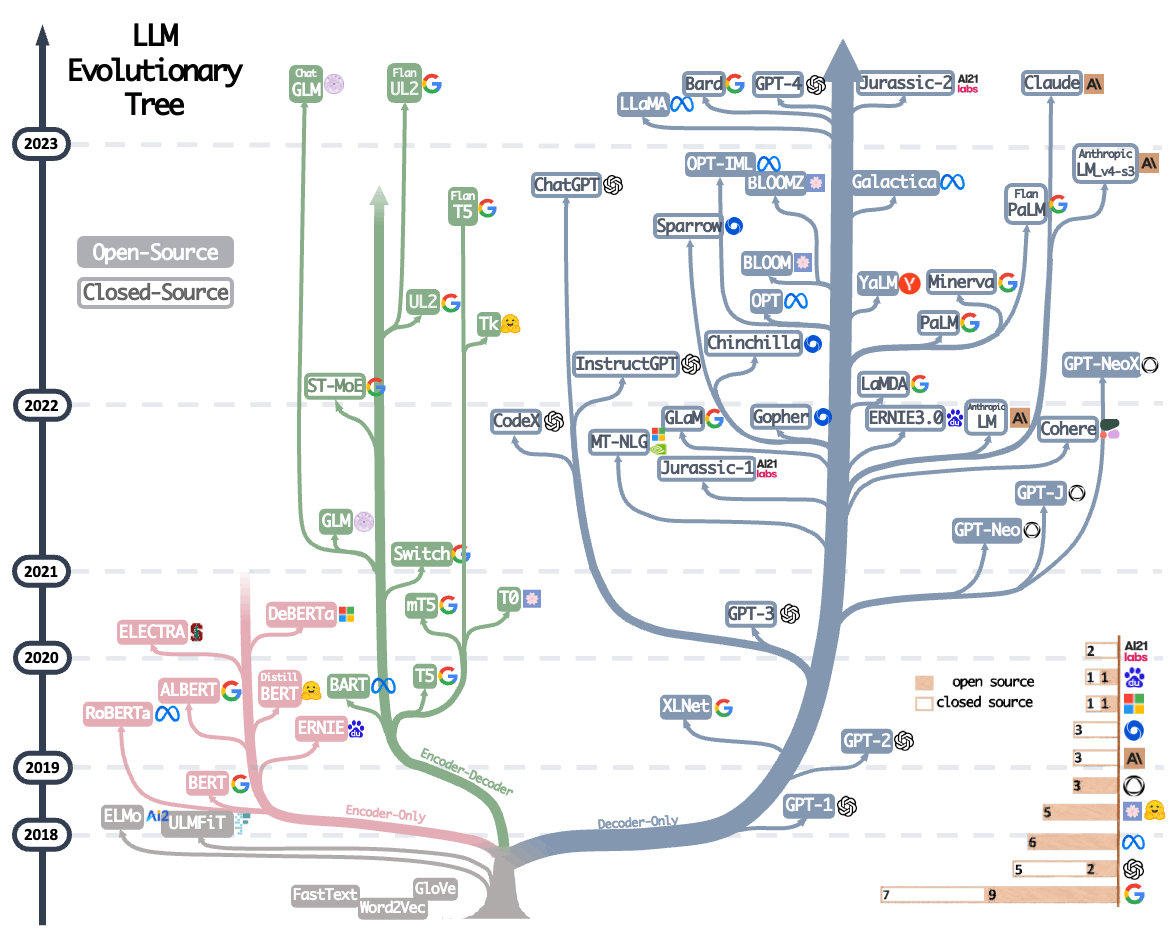

Large Language Models (LLMs) have taken the whole world by storm. Here is a curated list of papers about large language models, especially relating to ChatGPT. It also contains frameworks for LLM training, tools to deploy LLMs, courses and tutorials about LLMs, and all publicly available LLM checkpoints and APIs.

Reasoning with Foundation Models

We organize current foundation models into three categories: language foundation models, vision foundation models, and multimodal foundation models. We then discuss how foundation models are applied to reasoning tasks, including commonsense, mathematical, logical, causal, visual, audio, multimodal, and agent reasoning. Reasoning techniques, including pre-training, fine-tuning, alignment training, mixture of experts, in-context learning, and autonomous agents, are also summarized.