SGLang v0.2

❖ Through our operational experiences and in-depth research, we’ve continuously enhanced the underlying serving systems for Chatbot Arena, spanning from the high-level multi-model serving framework, FastChat, to the efficient serving engine for LLMs and VLMs, SGLang Runtime (SRT). While existing options like TensorRT-LLM, vLLM, and Hugging Face TGI have their merits, we found them sometimes hard to use, difficult to customize, or lacking in performance. This motivated us to create a serving engine that is not only user-friendly and easily modifiable but also delivers top-tier performance.

FlashAttention-3

❖ Attention, as a core layer of the ubiquitous Transformer architecture, is a bottleneck for large language models and long-context applications. FlashAttention (and FlashAttention-2) pioneered an approach to speed up attention on GPUs by minimizing memory reads/writes, and is now used by most libraries to accelerate Transformer training and inference. This has contributed to a massive increase in LLM context length in the last two years, from 2-4K (GPT-3, OPT) to 128K (GPT-4), or even 1M (Llama 3).
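
To make "minimizing memory reads/writes" concrete, here is a minimal pure-Python sketch of blockwise attention with a running softmax for a single query. It is an illustration of the general idea, not the FlashAttention kernels themselves: the function names and `block_size` are hypothetical, and the usual 1/sqrt(d) scaling is omitted for brevity. The point is that keys and values are visited one block at a time, so the full score vector is never stored.

```python
import math

def naive_attention_row(q, keys, values):
    """Reference: materialize every score, then softmax, then weighted sum."""
    scores = [sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    denom = sum(exps)
    dim = len(values[0])
    return [sum(e * v[d] for e, v in zip(exps, values)) / denom for d in range(dim)]

def tiled_attention_row(q, keys, values, block_size=2):
    """Visit keys/values block by block, keeping only a running max,
    a running softmax denominator, and an unnormalized output accumulator."""
    dim = len(values[0])
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(qi * ki for qi, ki in zip(q, k)) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated under the old max to the new max.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            for d in range(dim):
                acc[d] += w * v[d]
        running_max = new_max
    return [a / running_sum for a in acc]
```

Both functions should agree up to floating-point error for any block size; only the amount of intermediate state differs.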

DeepSpeed-FastGen Enhancements

❖ DeepSpeed-FastGen is an inference system framework that enables easy, fast, and affordable inference for large language models (LLMs). From general chat models to document summarization, and from autonomous driving to copilots at every layer of the software stack, the demand to deploy and serve these models at scale has skyrocketed. DeepSpeed-FastGen utilizes the Dynamic SplitFuse technique to tackle the unique challenges of serving these applications and offer higher effective throughput than other state-of-the-art systems.
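
As a rough illustration of the scheduling idea behind Dynamic SplitFuse, the sketch below builds each forward pass from a fixed token budget: requests that are already generating contribute one token each, and the rest of the budget is filled with prompt chunks, splitting long prompts across several passes. The `Request` class, `schedule_step`, and the budget value are hypothetical names chosen for this example, not DeepSpeed-FastGen's API, and the sketch simplifies by starting decoding in the pass after a prompt finishes.

```python
from dataclasses import dataclass

@dataclass
class Request:
    name: str
    prompt_tokens_left: int   # prompt tokens not yet processed
    decode_tokens_left: int   # output tokens still to generate

def schedule_step(requests, token_budget):
    """Build one forward pass as (name, num_tokens, kind) entries
    whose token counts sum to at most token_budget."""
    batch = []
    budget = token_budget
    # Requests that have finished their prompt generate one token each.
    for r in requests:
        if budget == 0:
            break
        if r.prompt_tokens_left == 0 and r.decode_tokens_left > 0:
            batch.append((r.name, 1, "decode"))
            r.decode_tokens_left -= 1
            budget -= 1
    # Fill the remaining budget with prompt chunks, splitting long prompts.
    for r in requests:
        if budget == 0:
            break
        if r.prompt_tokens_left > 0:
            chunk = min(r.prompt_tokens_left, budget)
            batch.append((r.name, chunk, "prefill"))
            r.prompt_tokens_left -= chunk
            budget -= chunk
    return batch
```

Calling `schedule_step` repeatedly on the same request list until it returns an empty batch simulates a full run; every pass stays within the budget, so a long prompt never monopolizes a single large forward pass.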

SGLang

❖ Large Language Models (LLMs) are increasingly utilized for complex tasks that require multiple chained generation calls, advanced prompting techniques, control flow, and interaction with external environments. However, there is a notable deficiency in efficient systems for programming and executing these applications. To address this gap, we introduce SGLang, a Structured Generation Language for LLMs. SGLang enhances interactions with LLMs, making them faster and more controllable by co-designing the backend runtime system and the frontend languages.

Accelerating Generative AI with PyTorch

❖ Over the past year, generative AI use cases have exploded in popularity. Text generation has been one particularly popular area, with lots of innovation among open-source projects such as llama.cpp, vLLM, and MLC-LLM. While these projects are performant, they often come with tradeoffs in ease of use, such as requiring model conversion to specific formats or building and shipping new dependencies. This raises the question: how fast can we run transformer inference with only pure, native PyTorch?

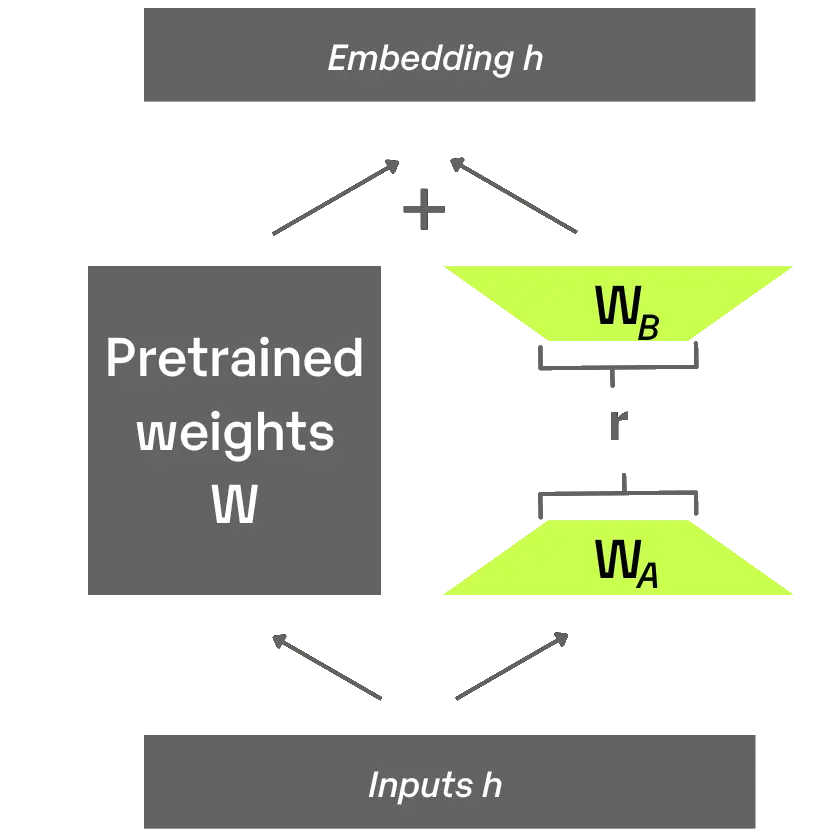

S-LoRA

❖ We introduce S-LoRA, a system designed for the scalable serving of many LoRA adapters. S-LoRA combines three ideas: Unified Paging for the KV cache and adapter weights, which reduces memory fragmentation; Heterogeneous Batching of LoRA computations with different ranks, using optimized custom CUDA kernels that are aligned with the memory pool design; and S-LoRA TP, a tensor parallelism strategy that ensures effective parallelization across multiple GPUs while incurring minimal communication cost for the added LoRA computation compared to that of the base model.
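
The Unified Paging idea can be sketched as a single pool of equal-size pages that both KV cache entries and adapter weights draw from. This is a toy model under stated assumptions, not S-LoRA's implementation: the class and method names are hypothetical, and a "page" here is just an integer index rather than GPU memory.

```python
class UnifiedPagePool:
    """A fixed number of equal-size pages shared by KV cache entries
    and LoRA adapter weights (toy model; pages are plain indices)."""

    def __init__(self, num_pages):
        self.free_pages = list(range(num_pages))
        self.owner = {}  # page index -> (kind, object id)

    def allocate(self, kind, obj_id, num_pages):
        """Hand out any free pages; they need not be contiguous."""
        if num_pages > len(self.free_pages):
            raise MemoryError("not enough free pages")
        pages = [self.free_pages.pop() for _ in range(num_pages)]
        for p in pages:
            self.owner[p] = (kind, obj_id)
        return pages

    def release(self, pages):
        """Return pages to the pool so either kind of object can reuse them."""
        for p in pages:
            del self.owner[p]
            self.free_pages.append(p)
```

Because both kinds of objects share one pool and allocations are non-contiguous, pages freed by an evicted adapter can be reused immediately by a growing KV cache (and vice versa), which is how a paged design avoids fragmentation.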

vLLM vs. DeepSpeed-FastGen

❖ vLLM matches DeepSpeed-FastGen’s speed in common scenarios and surpasses it when handling longer outputs. DeepSpeed-FastGen only outperforms vLLM in scenarios with long prompts and short outputs, due to its Dynamic SplitFuse optimization. This optimization is on vLLM’s roadmap. vLLM’s mission is to build the fastest and easiest-to-use open-source LLM inference and serving engine. It is licensed under Apache 2.0 and community-owned, offering extensive model and optimization support.

DeepSpeed-FastGen

❖ Large language models (LLMs) like GPT-4 and LLaMA have emerged as a dominant workload in serving a wide range of applications infused with AI at every level. While frameworks like DeepSpeed, PyTorch, and several others can regularly achieve good hardware utilization during LLM training, the interactive nature of these applications and the poor arithmetic intensity of tasks like open-ended text generation have become the bottleneck for inference throughput in existing systems.
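
A back-of-the-envelope calculation shows why token generation has poor arithmetic intensity. The sketch below assumes a dense model where each generated token costs about 2 FLOPs per parameter and the weights (2 bytes each in 16-bit precision) are read once per forward pass and shared across the batch; KV cache and activation traffic are ignored. The function names are hypothetical, and any hardware figures passed to `ridge_point` are the caller's own assumptions.

```python
def decode_arithmetic_intensity(num_params, batch_size, bytes_per_param=2):
    """Approximate FLOPs per byte of weight traffic for one decoding step."""
    flops = 2 * num_params * batch_size          # one multiply-add per weight per sequence
    bytes_read = num_params * bytes_per_param    # weights read once, shared by the batch
    return flops / bytes_read

def ridge_point(peak_flops_per_s, mem_bandwidth_bytes_per_s):
    """FLOPs per byte needed before a device becomes compute-bound."""
    return peak_flops_per_s / mem_bandwidth_bytes_per_s
```

Under these assumptions the intensity is roughly equal to the batch size, independent of model size: about 1 FLOP per byte at batch size 1. A device whose ridge point is in the hundreds of FLOPs per byte therefore spends a small-batch decoding step waiting on memory, which is the throughput bottleneck the paragraph describes.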